For some time now I’ve wanted to move away from my QNAP NAS boxes. I have been tossing around a number of ideas, and this is what I finally came up with.

Before we begin I must say that I have been using QNAP for over 10 years. These boxes have been great and offered good value. My most recent QNAP is my fourth NAS in about 10 years.

However, my entire LVM failed on me last year (read about Restoring a 38TB NAS on this site) and QNAP support was very unfriendly, forcing me to recover the NAS drives outside of the QNAP with the help of Diskinternals, who were super friendly and offered VMFS support, which I needed as I had a few iSCSI targets on my ESX clusters. My backup QNAP is also about to fail, as the S.M.A.R.T status is not in my favor on one or two drives in my 8-drive config. So my main NAS is not working great and has hardware issues (which caused the LVM problem), the reseller has gone bankrupt, and my backup NAS has drives that could break at any time. Time to review my storage plan.

My plan is to have two enclosures hosting my main NAS and my backup NAS respectively. The main NAS will be connected to a dedicated server with its own RAID controller. The backup NAS will be connected to a dedicated RAID controller that will be passed through in VMware to a VM hosting the backup NAS. The VM option is at the moment just a test as I prefer to have dedicated hardware for the backup as well.

NAS Hardware

One of the main reasons for getting a NAS is the form factor. My compute resources are located in two closets that do not offer enough depth to rack a standard server.

The first decision was, based on the above, quite hard, as the space constraints limit my options. After a while I came to the conclusion that the Lenovo SA120 would be the best fit. However, these are quite expensive, and most of those found on eBay only have one PSU. I’d like to have two, one connected to my UPS and one to the mains. I would also prefer two SAS controllers. So I found my two Lenovo enclosures on eBay, together with two controllers. All great! Now the quest for two additional PSUs began, and it was a painful hunt. However, I found two in Italy a few weeks ago, and the cost was almost the same as for an entire enclosure. I will come to the costs of the build later in the post.

Having these enclosures in my rack was great. I had to do some reorganization in the rack, as the shelves I planned to place the enclosures on did not fit them: the enclosures really are 19″ wide, with not a mm to spare. It worked, however, once I mounted the shelves upside down (doh!), and they are rock solid. That means I can’t have a 19″ unit below the NAS, but I won’t have that in any case, as I have mounted the enclosures at the bottom of each rack, where the intake from the fans sits, cooling the drives well.

Main NAS

The main NAS has 10 x 6 TB SAS (NL) drives (HGST), 9 in a RAID-5 setup and one as a hot spare, in a Lenovo SA120 enclosure. This allows for an expansion of 2 drives if/when needed. The NAS is connected to one LSI MegaRAID SAS 9268CV-8e RAID controller with BBU and CacheVault (1GB RAM) that sits in my HP DL20 server running Windows Server 2016 Datacenter with Storage Spaces. In addition to the LSI card I’ve popped in an Intel X520-2 10 Gigabit SFP+ network card for the speed.

Backup NAS

The backup NAS has 10 x 2 TB SAS (NL) drives (HP), 9 in a RAID-5 setup and one as a hot spare, in a Lenovo SA120 enclosure. This allows for an expansion of 2 drives if/when needed. The NAS is connected to one LSI MegaRAID SAS 9268CV-8e RAID controller with BBU and CacheVault (1GB RAM) that is passed through to a virtual machine running Windows Server 2016 Datacenter with Storage Spaces. The plan was to use the 8 x 6 TB SATA drives that have been sitting in my QNAP storage, but as I wanted to run the systems in parallel I found a few SAS drives that I decided to use instead. I should probably swap these at some point, as I would then be able to run a backup of my entire main NAS.
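
To put the two arrays side by side, here is a minimal sketch of their usable capacity, assuming plain RAID-5 (one drive’s worth of space goes to parity) and leaving the hot spare out of the count. The function name `raid5_usable_tb` is made up for this illustration, and sizes are in decimal TB as printed on the drives.

```python
def raid5_usable_tb(drives_in_array: int, drive_size_tb: float) -> float:
    """Usable capacity of one RAID-5 array: one drive's worth is lost to parity."""
    if drives_in_array < 3:
        raise ValueError("RAID-5 needs at least three drives")
    return (drives_in_array - 1) * drive_size_tb


# Drive counts and sizes are taken from the post; hot spares are not counted.
main_tb = raid5_usable_tb(9, 6)    # 9 x 6 TB in the main NAS
backup_tb = raid5_usable_tb(9, 2)  # 9 x 2 TB in the backup NAS

print(f"main:   {main_tb:.0f} TB usable")
print(f"backup: {backup_tb:.0f} TB usable")
print(f"backup covers {backup_tb / main_tb:.0%} of the main array")
```

By this estimate the current backup array holds about a third of what the main array can, which fits the remark that larger drives would be needed for a full backup of the main NAS.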

Lenovo SA120

The Lenovo SA120 enclosures are perfect for my use case. They are only 15.19″ deep, which fits my closet almost perfectly. Having room for 24 drives in total will allow me to expand, and as these are just generic SAS/SATA enclosures I can upgrade my drives over time. Having three empty slots would give me flexibility in terms of expanding my storage when/if needed. I do miss the 2.5″ drive bays as I don’t have these, but they are way too expensive to justify a few cache drives. I would most likely have had more use for them if I’d gone the JBOD route with Storage Spaces and used the SSD cache auto-tiering.

HP DL20

As space in my closet is limited, I’ve agonized for some time over my few options for servers. All my servers are DIY builds based on the excellent Silverstone chassis and are therefore not 19″. This time I decided that I should at least try to find a server that could be used as a storage server, where my requirements for CPU and memory are low. I did try a Supermicro server, but it was way too loud and too deep to fit my rack and the cables. I’d wanted to get my hands on an HP server for some time, as my homelab adventure more or less started with the HP Microserver, so I decided to get one. These are quite expensive and hard to get hold of, but so are most short-depth servers. I looked at DELL as well but decided that I’d rather use hardware that I have more recent knowledge of. I also have quite a large inventory of HP RAID cards, NICs etc. that can be tricky to use elsewhere.

The LSI card worked fine. That was my main worry, as non-HP PCI gear can make the fans spin at 100%, but this was not the case. However, installing my Samsung SSD in an HP caddy and running that in the server caused the fans to run at 70% without settling down. The drive was detected, including its S.M.A.R.T values, but as it was a non-HP drive the server had to punish me. As the motherboard in this particular server only offers SATA (even for the SFF drive bays), it was hard to solve the issue. I was lucky to find that one drive we had scrapped at work was a 60 GB SATA HP drive. It came from a G8 server, so I had to swap its caddy for a G9 caddy, which fits the G10 server I’ve got, and to my surprise that worked fine.

NAS Software

For this experiment I’m using Storage Spaces, not as a JBOD setup, as I’d like to take advantage of the RAID card, but as a way to get synchronous replication between the main and backup NAS, SMB 3.0 out of the box, easy iSCSI target support, and NFS support for some VMs where I need to mount the main NAS. All virtual disks are configured as “Raid-0”, as they all sit on the virtual volumes from the RAID card. There is only one storage pool.

I have been sceptical about Storage Spaces since it failed on me a few years ago, when I was testing it in Windows Server 2012. However, this time I’ve been running more tests and, perhaps more importantly, I’m still relying on a RAID HBA for the management of the drives.

Both servers (physical and VM) have the Lenovo management software installed to monitor the temperature and fan speeds and, more importantly, to let me control the fan speeds.

Performance

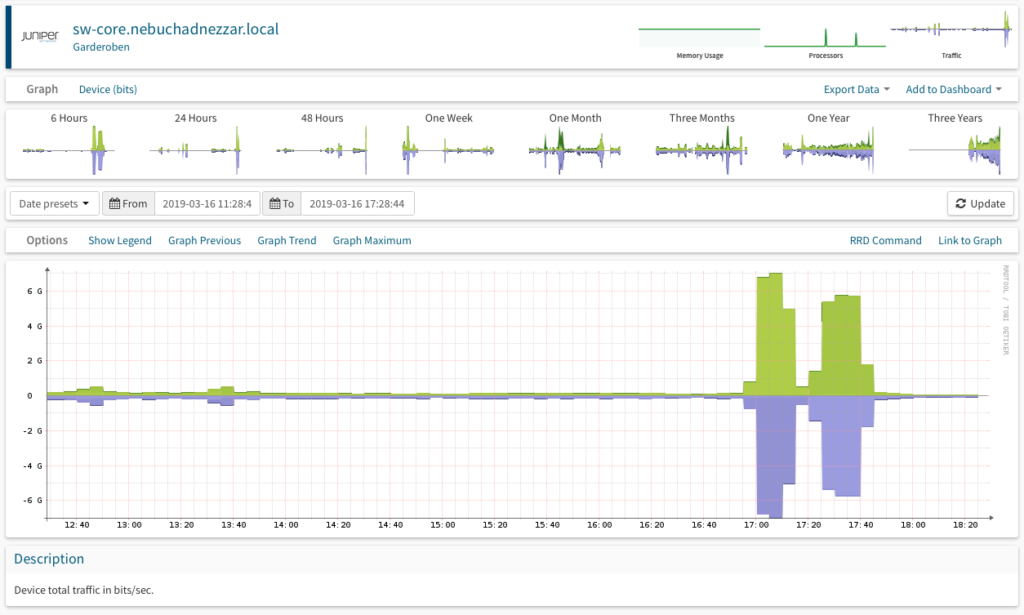

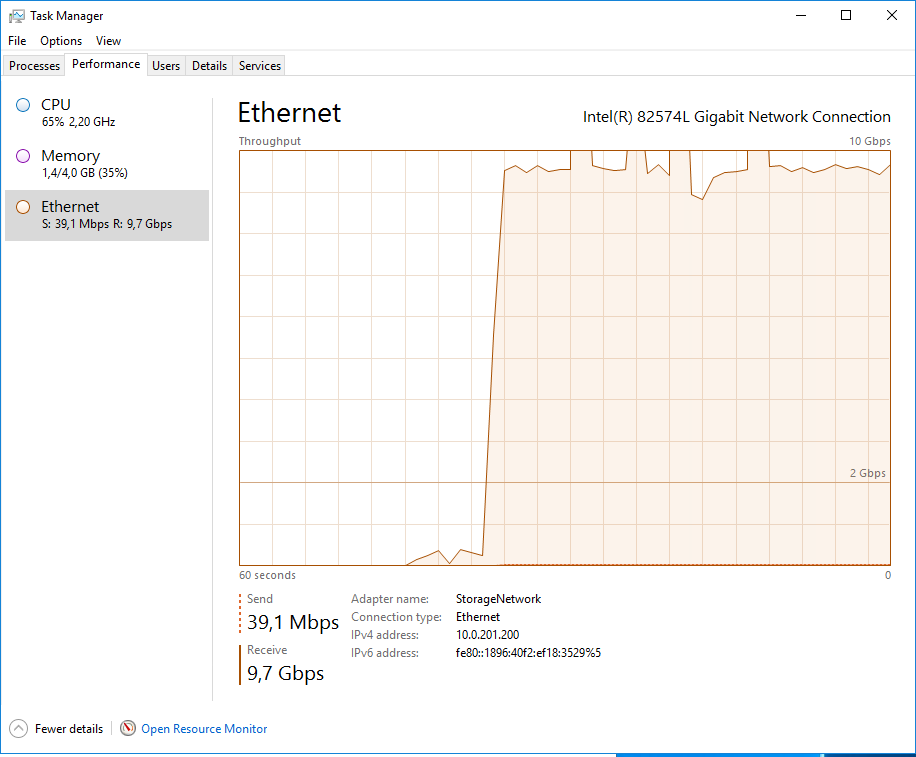

I must say that I’m super pleased with the performance of the two NAS servers. I’m using 10 Gigabit/s Ethernet on both of the servers: a physical NIC in the first NAS, and a 10 Gigabit NIC on the ESXi host for the VM. My main computer (Mac mini) has 10 Gigabit as well, and my entire networking stack, consisting of 3 Juniper switches, has 10 Gigabit networking. In fact, based on a few read/write tests, 10 Gigabit is too slow to keep up with the data from the 9-disk arrays.
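
As a back-of-the-envelope illustration of why a 9-disk array can outrun a 10 Gigabit link, here is a small sketch. The per-drive throughput of 200 MB/s is purely an assumption for the example and was not measured in this build. The RAID-5 model is also idealised (all drives streaming, one drive’s share being parity), and the link figure ignores protocol overhead.

```python
# Assumption for illustration only: sustained sequential throughput of one
# nearline SAS drive. This value was not measured in the post.
ASSUMED_DRIVE_MB_S = 200


def link_mb_s(gigabit_per_s: float) -> float:
    """Raw link capacity in decimal MB/s, ignoring protocol overhead."""
    return gigabit_per_s * 1000 / 8


def raid5_streaming_mb_s(drives_in_array: int, drive_mb_s: float) -> float:
    """Idealised sequential rate: every drive streams, one share is parity."""
    return (drives_in_array - 1) * drive_mb_s


link = link_mb_s(10)
array = raid5_streaming_mb_s(9, ASSUMED_DRIVE_MB_S)

print(f"10 Gigabit link: {link:.0f} MB/s")
print(f"9-disk RAID-5:   {array:.0f} MB/s (idealised)")
print("network is the bottleneck" if array > link else "array is the bottleneck")
```

With a different per-drive figure the conclusion may flip, so treat the constant as a knob to adjust rather than a fact about these drives.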

During a file copy job from the main NAS to the backup NAS, my core switch reached 6 Gigabit/s, which is 750 MB/s.

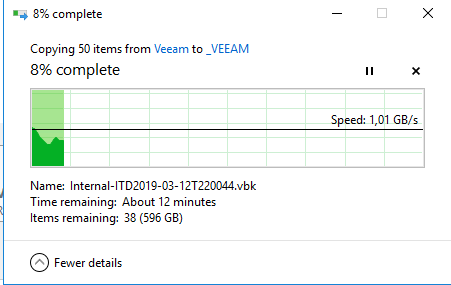

This improved during a later migration, which reached speeds of 1 GB/s.

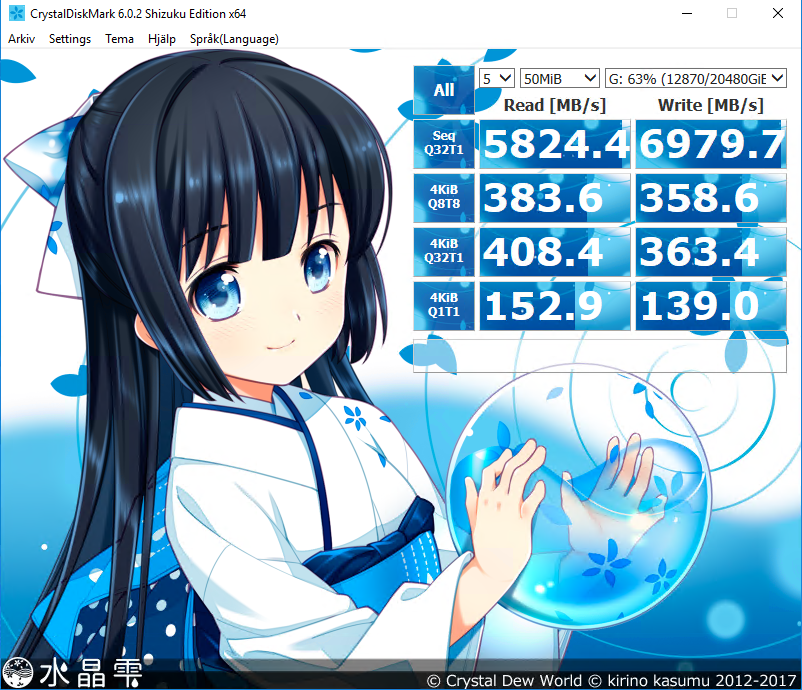

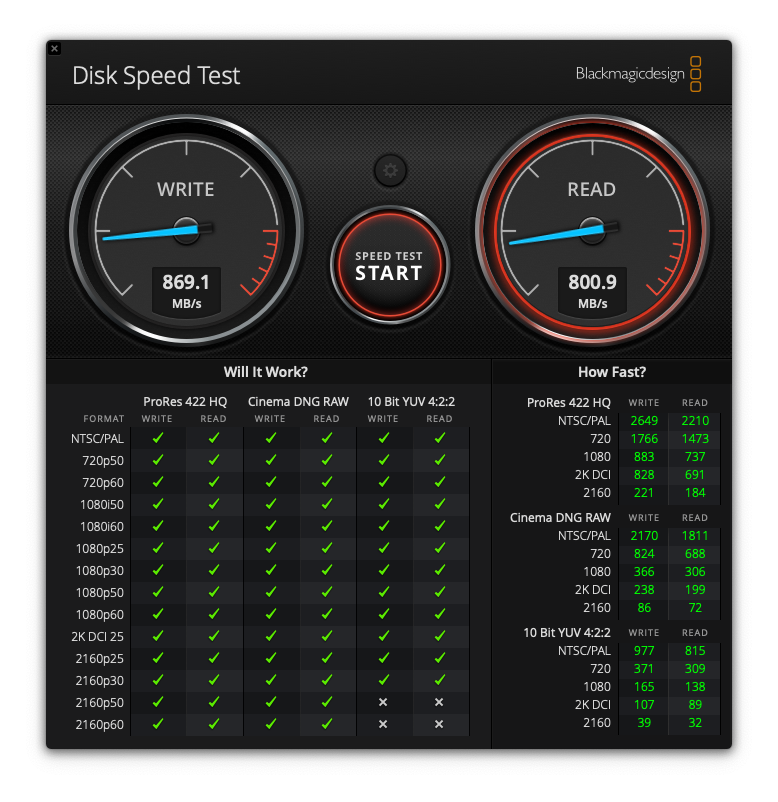

I had to run a disk speed test on my SMB 3.0 share from my Mac mini. Not complaining here. My Mac mini is connected via Thunderbolt 3 to an external PCI enclosure hosting a dual 10 Gigabit Intel SFP+ network card.

This is not an entirely real-world test as I’m using a 50MB file, but it reached almost 7 GB/s write performance (must be leveraging the CacheVault on the MegaRAID card). That’s 56 Gigabit/s. The Q1T1 result is damn impressive.
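
To relate the figures quoted in this section to each other, the sketch below converts each one to Gigabit/s and expresses it as a share of a single 10 Gigabit link. The helper name `mb_s_to_gbit_s` is made up for this illustration, the values are the rounded numbers from the text (decimal units), and the labels are just descriptive.

```python
LINK_GBIT_S = 10  # a single 10 Gigabit link


def mb_s_to_gbit_s(mb_per_s: float) -> float:
    """Convert decimal MB/s to Gigabit/s (8 bits per byte)."""
    return mb_per_s * 8 / 1000


# Rounded figures as quoted in the post, in MB/s.
measurements_mb_s = {
    "file copy (core switch)": 750,
    "later migration": 1000,
    "50MB benchmark write": 7000,  # "almost 7 GB/s", rounded up
}

for name, mb_s in measurements_mb_s.items():
    gbit = mb_s_to_gbit_s(mb_s)
    print(f"{name:<26}{gbit:5.1f} Gbit/s  {gbit / LINK_GBIT_S:5.0%} of link")
```

Anything well above 100% cannot have crossed a single 10 Gigabit link in full, so for a file this small some caching along the path is presumably doing much of the work.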

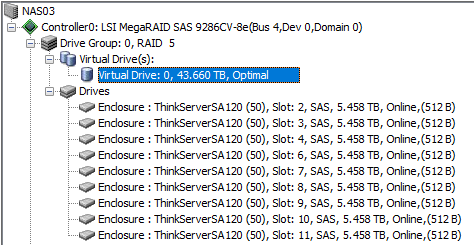

43 TB Virtual Drive

I have just started the asynchronous replication between the two enclosures (NASes).
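
A likely explanation for the 43 TB figure, assuming it is the main array’s RAID-5 volume as shown by Windows (which reports sizes in binary units while labelling them TB), is the difference between decimal and binary terabytes. The sketch below does that conversion. The helper name `tb_to_tib` is made up for this illustration.

```python
TB = 10**12   # decimal terabyte, as used on drive labels
TIB = 2**40   # binary tebibyte, which Windows displays as "TB"


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes to binary tebibytes."""
    return tb * TB / TIB


# 9-drive RAID-5 of 6 TB drives: one drive's worth goes to parity.
usable_tb = (9 - 1) * 6

print(f"{usable_tb} TB = {tb_to_tib(usable_tb):.2f} TiB")
```

The result comes out a little under 44 TiB, which would be shown as 43 TB if the display truncates to whole units.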